16 Predictions with rpart

Decision trees are among the most popular tools for classification and prediction. Much of their popularity comes from the rules they generate, which are easier to understand than the output of many other models. They also require relatively little computation to build and to use for prediction, and they handle both continuous (numerical) and categorical variables easily.

16.1 Use of Rpart

The Recursive Partitioning and Regression Trees (RPART) library is a collection of routines that implement decision trees. The resulting model can be represented as a binary tree.

The R package that implements RPART is called rpart. Install it using install.packages("rpart").

Syntax for building the decision tree using rpart():

rpart(formula, method, data, control, ...)

- formula: here we specify the column to predict and the columns (predictors) on which the prediction will be based.

prediction ~ predictor1 + predictor2 + predictor3 + ...

- method: here we describe the type of decision tree we want. If nothing is provided, the function makes an intelligent guess. We can use “anova” for regression, “class” for classification, etc.

- data: here we provide the dataset on which we want to fit the decision tree.

- control: here we provide the control parameters for the decision tree. These are explained in more detail in section 16.3.

For more information on the rpart() function, see the rpart documentation.

Let's look at an example on the Moody 2022 dataset.

- We will use the rpart() function with the following inputs:

- prediction -> GRADE

- predictors -> SCORE, DOZES_OFF, TEXTING_IN_CLASS, PARTICIPATION

- data -> moody dataset

- method -> “class” for classification.

16.1.1 Snippet 1
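A minimal sketch of this call follows. It assumes a synthetic stand-in for the Moody data: the data frame `moody_sim`, its value ranges, and the rule that assigns grades are all simulated for illustration and are not the real dataset, so the printed tree will differ from the one discussed in the text.

```r
library(rpart)

# Synthetic stand-in for the Moody data (simulated, NOT the real dataset)
set.seed(42)
n <- 1000
habits <- c("never", "sometimes", "always")
moody_sim <- data.frame(
  SCORE            = runif(n, 0, 100),
  DOZES_OFF        = factor(sample(habits, n, replace = TRUE)),
  TEXTING_IN_CLASS = factor(sample(habits, n, replace = TRUE)),
  PARTICIPATION    = runif(n, 0, 1)
)
# Assumed rule: the grade depends on SCORE, nudged by PARTICIPATION
moody_sim$GRADE <- cut(moody_sim$SCORE + 10 * moody_sim$PARTICIPATION,
                       breaks = c(-Inf, 40, 60, 75, 90, Inf),
                       labels = c("F", "D", "C", "B", "A"))

# Classification tree: GRADE is the prediction column, the rest are predictors
tree <- rpart(GRADE ~ SCORE + DOZES_OFF + TEXTING_IN_CLASS + PARTICIPATION,
              data = moody_sim, method = "class")

# Text output: one row per node (node, split, n, loss, yval, yprob)
print(tree)
```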

The output of the rpart() function is the decision tree, printed with the following details for each node:

- node -> node number

- split -> split conditions/tests

- n -> number of records in the node, i.e. the subset of the data that reaches it.

- yval -> output value i.e. the target predicted value.

- yprob -> probability of obtaining a particular category as the predicted output.

Using the output tree, we can use the predict() function to predict the grades of the test data. We will look at this process in section 16.5.

Coming back to the output of the rpart() function: the text output is useful but difficult to read and understand. We will look at visualizing the decision tree in the next section.

16.2 Visualize the Decision tree

To visualize and understand the rpart() tree output more easily, we use a library called rpart.plot. Its rpart.plot() function plots decision trees.

NOTE: The online runnable code block does not support the rpart.plot library and its functions, so the output of the following code examples is provided directly.

16.2.1 Snippet 2

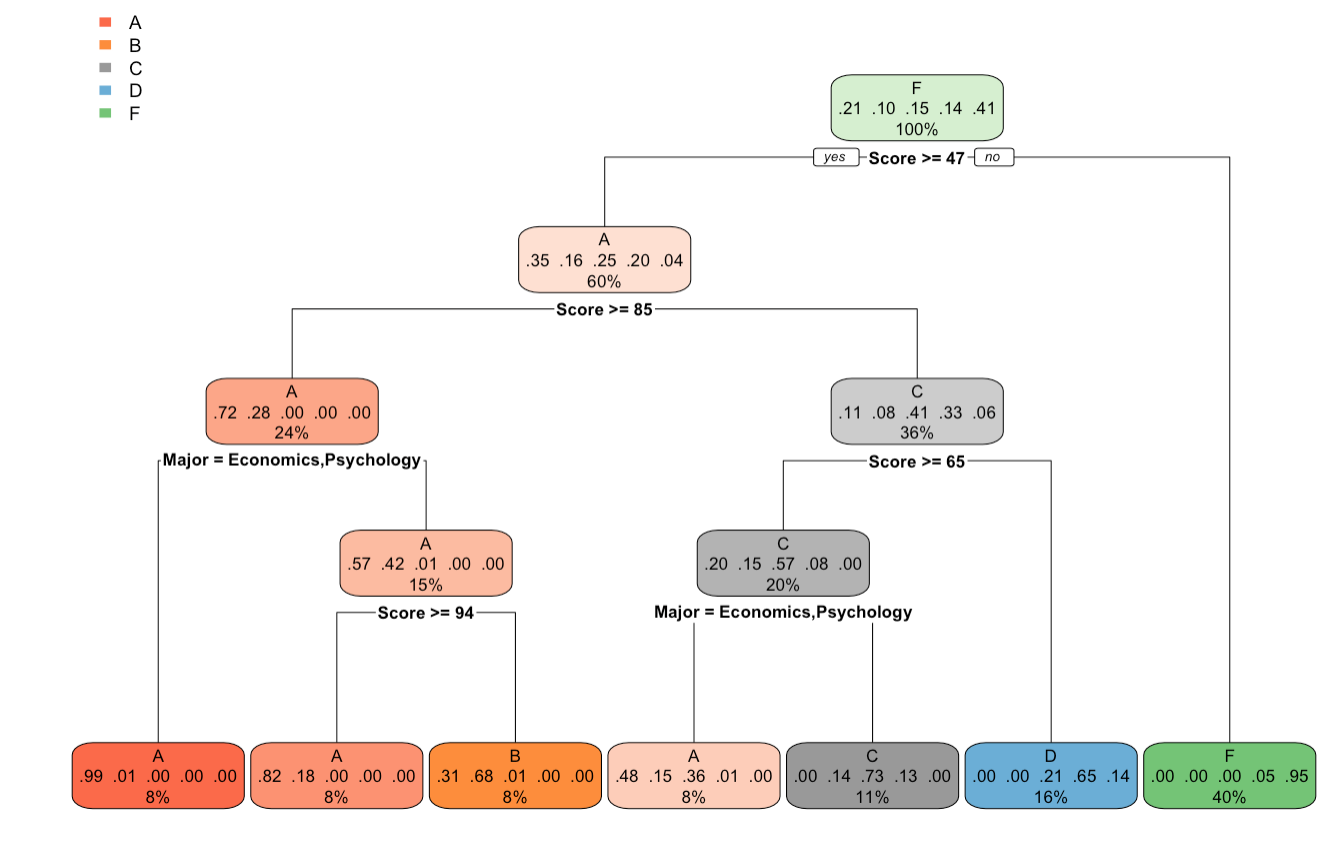

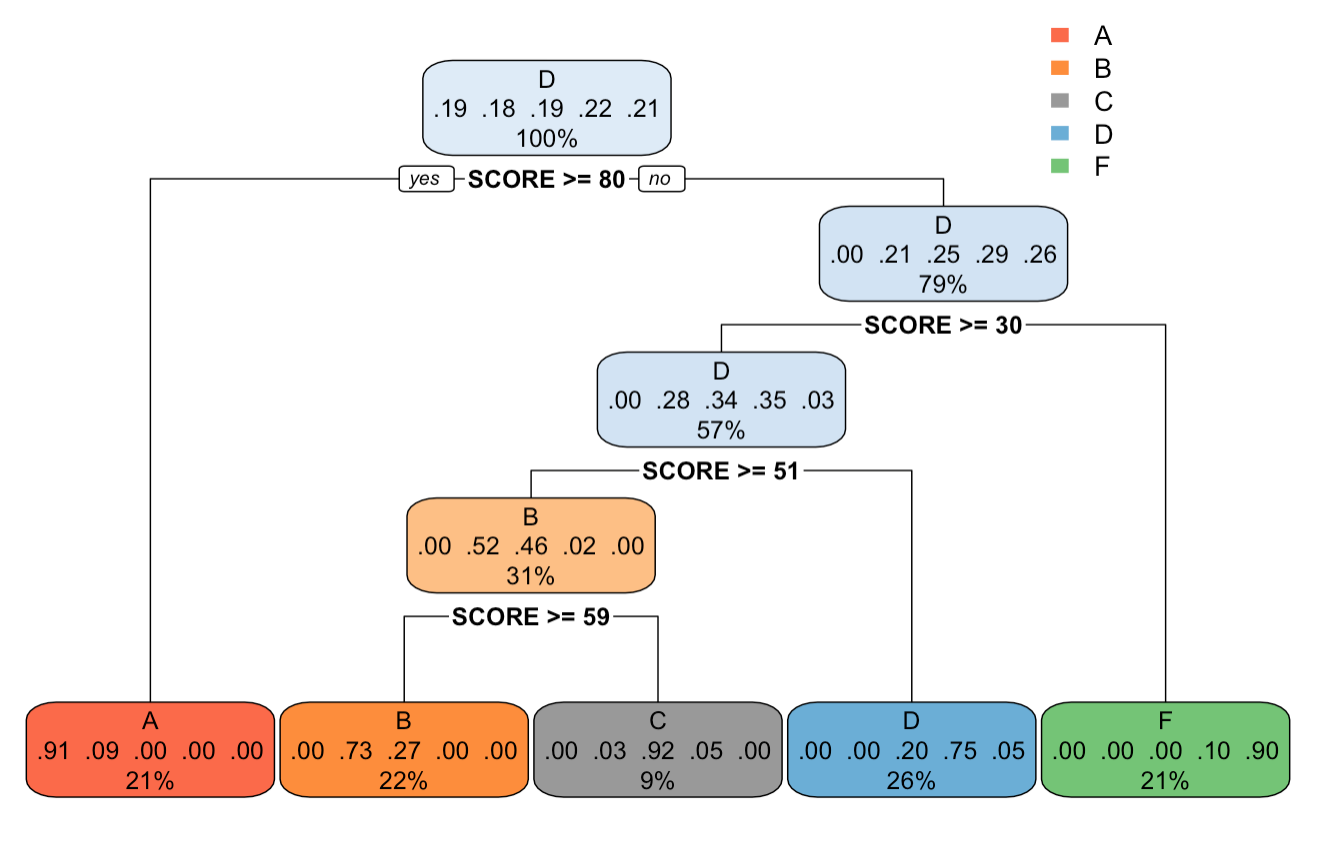

Output Plot of rpart.plot() function

After plotting the tree with the rpart.plot() function, the tree is more readable and gives clearer information about the splitting conditions and the probability of outcomes. Each leaf node shows:

- the grade category.

- the outcome probability of each grade category.

- the percentage of all records that fall in the node.

For more detail on the arguments that can be passed to the rpart.plot() function, see these guides: rpart.plot and Plotting with rpart.plot (PDF).
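For readers working in a local R session, plotting a fitted tree could look like the sketch below. It again uses a simulated stand-in (`moody_sim`, not the real Moody data), draws a basic plot with the plot() and text() methods that ship with rpart, and calls rpart.plot() only if that package happens to be installed. The choice of `extra = 104` (class probabilities plus the percentage of observations in each node) is an assumption about which display matches the figures here.

```r
library(rpart)

# Synthetic stand-in for the Moody data (simulated, NOT the real dataset)
set.seed(42)
n <- 1000
habits <- c("never", "sometimes", "always")
moody_sim <- data.frame(
  SCORE            = runif(n, 0, 100),
  DOZES_OFF        = factor(sample(habits, n, replace = TRUE)),
  TEXTING_IN_CLASS = factor(sample(habits, n, replace = TRUE)),
  PARTICIPATION    = runif(n, 0, 1)
)
moody_sim$GRADE <- cut(moody_sim$SCORE + 10 * moody_sim$PARTICIPATION,
                       breaks = c(-Inf, 40, 60, 75, 90, Inf),
                       labels = c("F", "D", "C", "B", "A"))

tree <- rpart(GRADE ~ SCORE + DOZES_OFF + TEXTING_IN_CLASS + PARTICIPATION,
              data = moody_sim, method = "class")

# Basic plot using only the rpart package
plot(tree, margin = 0.1)
text(tree, use.n = TRUE, cex = 0.8)

# Richer plot, drawn only when the rpart.plot package is available
if (requireNamespace("rpart.plot", quietly = TRUE)) {
  rpart.plot::rpart.plot(tree, extra = 104)
}
```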

| NOTE: From this point forward in this chapter, the plots generated by rpart.plot() in the examples are shown as images, and the code that generates them is commented out in the interactive code blocks. If you want to generate these plots yourself, please use a local RStudio or R environment. |

16.3 Rpart Control

Now let’s look at the rpart.control() function used to pass the control parameters to the control argument of the rpart() function.

rpart.control(*minsplit*, *minbucket*, *cp*, ...)

- minsplit: the minimum number of observations that must exist in a node for a split to be attempted. For example, minsplit=500 means a node must contain at least 500 observations before rpart tries to split it.

- minbucket: the minimum number of observations in any terminal (leaf) node. For example, minbucket=500 means every terminal (leaf) node of the tree must contain at least 500 observations.

- cp: complexity parameter. Any split that does not improve the overall fit by a factor of at least cp is not attempted.

For more information on the other arguments of the rpart.control() function, see rpart.control.

Let's look at a few examples.

Suppose you want to set the control parameter minsplit=200.

16.3.1 Snippet 3: Minsplit = 200

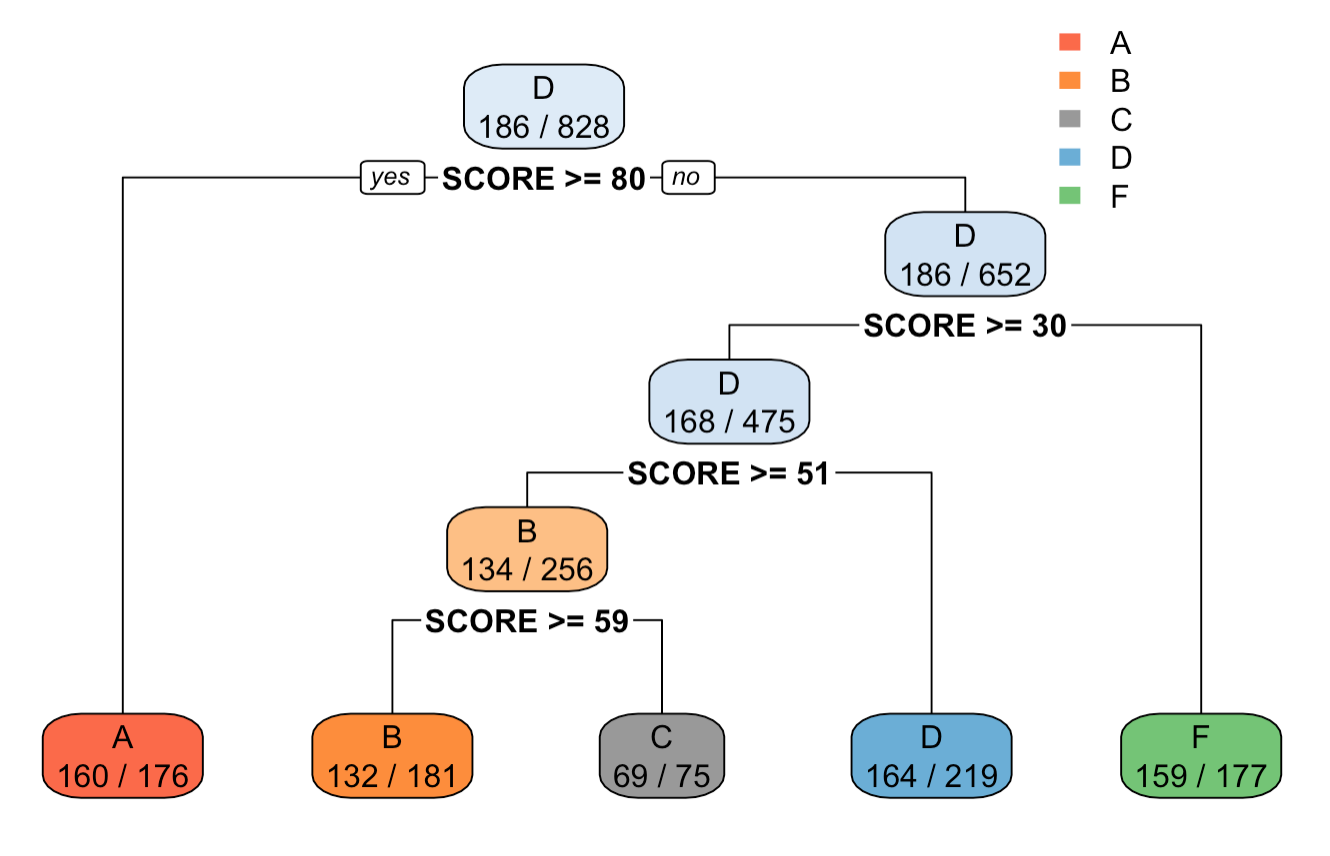

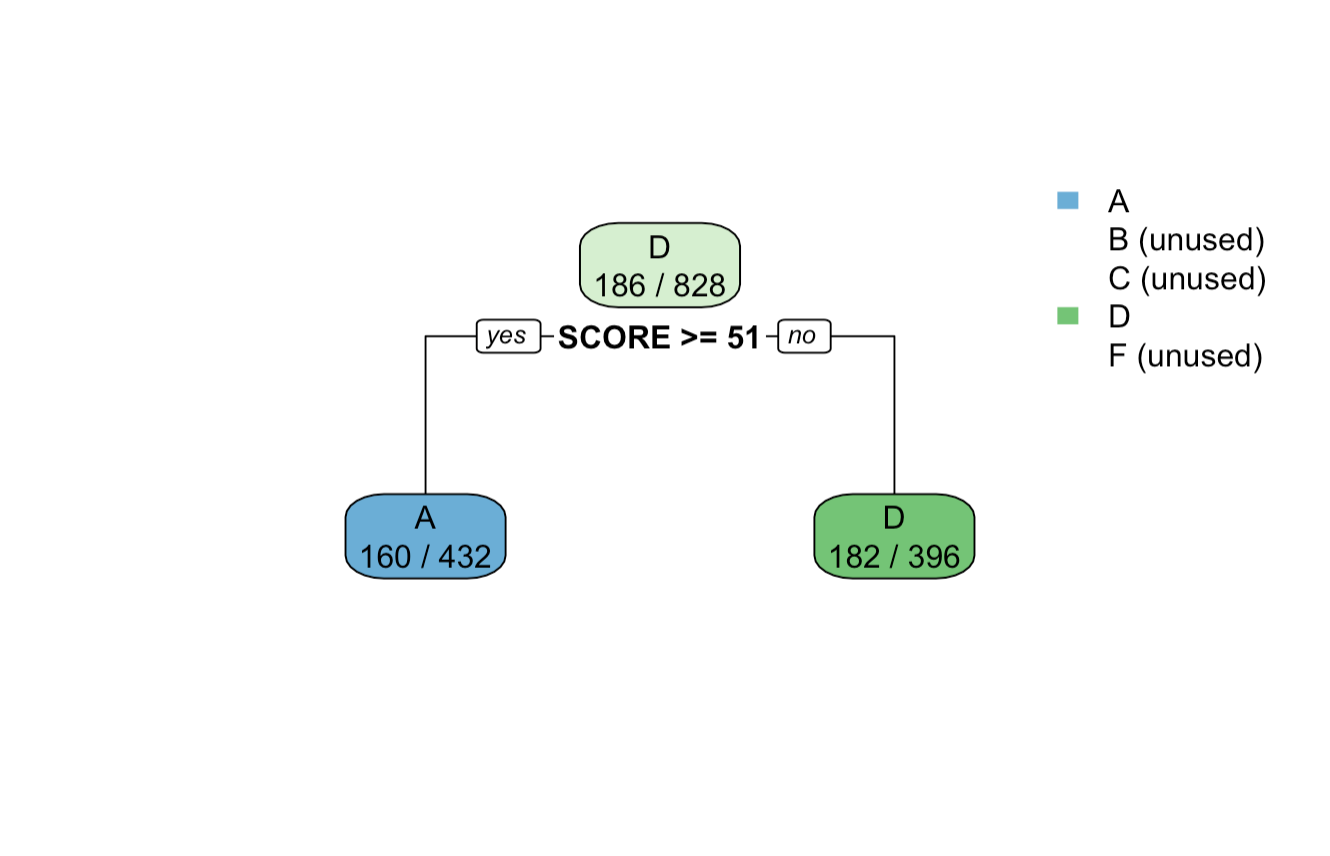

Output tree plot after setting minsplit=200 in the rpart.control() function

16.3.2 Snippet 4: Minsplit = 100

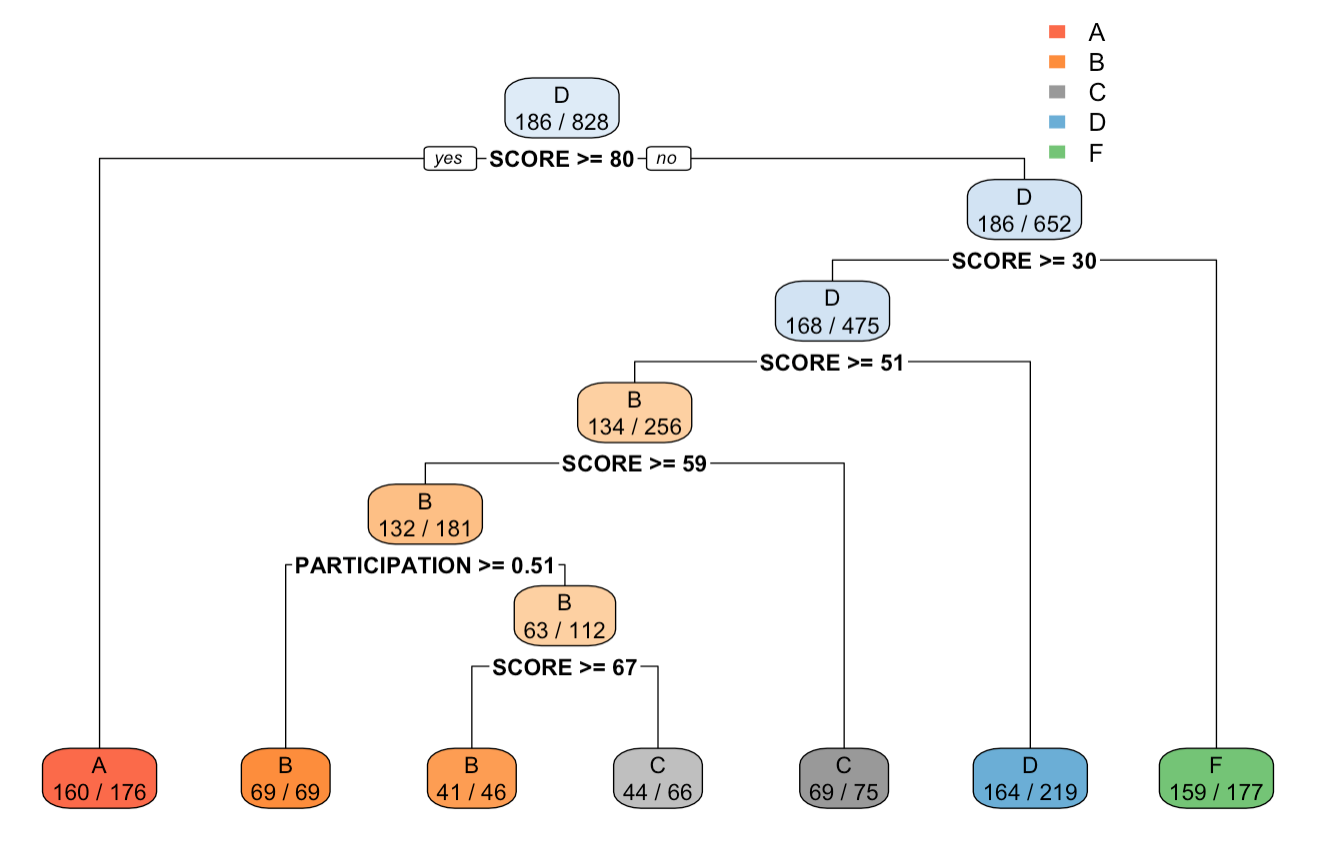

Output tree plot after setting minsplit=100 in the rpart.control() function

We can see from the output of tree$splits and from the tree plots that every node that is split contains at least 200 observations in the first tree and at least 100 in the second. Also, compared with the tree built without control parameters, the trees with control have a lower height and fewer splits.

Now, let's set the minbucket parameter to 100 and see how that affects the tree.
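The effect of minsplit can be checked directly on a fitted tree. The sketch below uses a simulated stand-in for the Moody data (`moody_sim` is an assumption, not the real dataset) and two small hypothetical helpers, `internal_n()` and `n_leaves()`, that read node sizes from the `frame` component of the rpart object.

```r
library(rpart)

# Synthetic stand-in for the Moody data (simulated, NOT the real dataset)
set.seed(42)
n <- 1000
habits <- c("never", "sometimes", "always")
moody_sim <- data.frame(
  SCORE            = runif(n, 0, 100),
  DOZES_OFF        = factor(sample(habits, n, replace = TRUE)),
  TEXTING_IN_CLASS = factor(sample(habits, n, replace = TRUE)),
  PARTICIPATION    = runif(n, 0, 1)
)
moody_sim$GRADE <- cut(moody_sim$SCORE + 10 * moody_sim$PARTICIPATION,
                       breaks = c(-Inf, 40, 60, 75, 90, Inf),
                       labels = c("F", "D", "C", "B", "A"))

grade_formula <- GRADE ~ SCORE + DOZES_OFF + TEXTING_IN_CLASS + PARTICIPATION

tree_default <- rpart(grade_formula, data = moody_sim, method = "class")
tree_ms200   <- rpart(grade_formula, data = moody_sim, method = "class",
                      control = rpart.control(minsplit = 200))

# Hypothetical helpers: sizes of the nodes that were split, and leaf count
internal_n <- function(tree) tree$frame$n[tree$frame$var != "<leaf>"]
n_leaves   <- function(tree) sum(tree$frame$var == "<leaf>")

# Every node that was split holds at least minsplit observations
internal_n(tree_ms200)

# Compare tree sizes with and without the control parameter
c(default = n_leaves(tree_default), minsplit_200 = n_leaves(tree_ms200))
```

When only minsplit is given, rpart.control() also sets minbucket to a third of minsplit, so the leaves of this tree are constrained as well.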

16.3.3 Snippet 5: Minbucket = 100

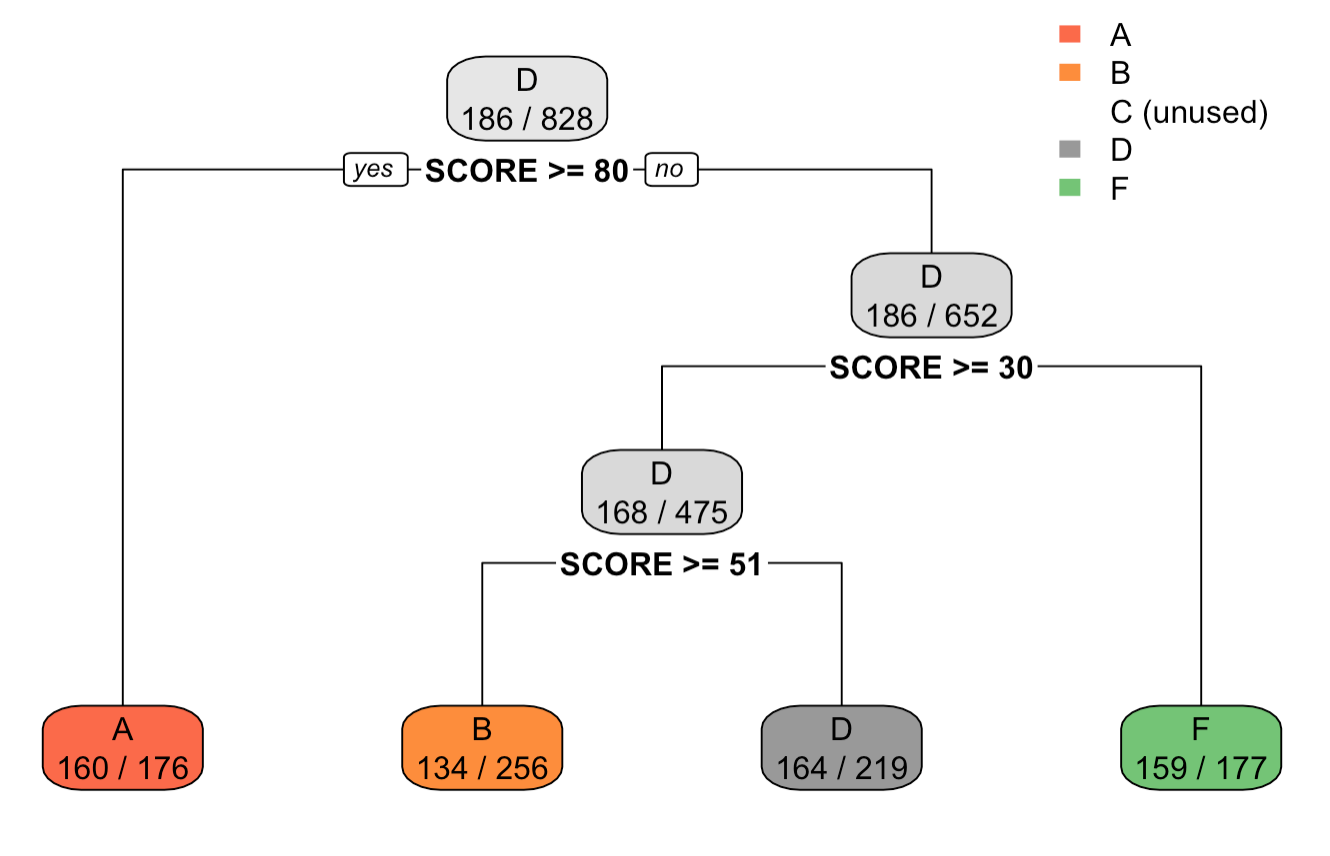

Output tree plot after setting minbucket=100 in the rpart.control() function

We can see from the output and the tree plot that the count of observations in each leaf node is at least 100. The tree is also shorter, showing that the control parameter reduced the tree size.

16.3.4 Snippet 6: Minbucket = 200

Output tree plot after setting minbucket=200 in the rpart.control() function

We can see from the output and the tree plot that the count of observations in each leaf node is at least 200. The tree is also shorter, showing that the control parameter reduced the tree size.

Let's now use the cp parameter and see its effect on the tree.
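The same kind of check works for minbucket. This is a sketch on a simulated stand-in for the Moody data (`moody_sim` is an assumption, not the real dataset); the helper `leaf_n()` is hypothetical and simply reads the leaf sizes from the tree's `frame`.

```r
library(rpart)

# Synthetic stand-in for the Moody data (simulated, NOT the real dataset)
set.seed(42)
n <- 1000
habits <- c("never", "sometimes", "always")
moody_sim <- data.frame(
  SCORE            = runif(n, 0, 100),
  DOZES_OFF        = factor(sample(habits, n, replace = TRUE)),
  TEXTING_IN_CLASS = factor(sample(habits, n, replace = TRUE)),
  PARTICIPATION    = runif(n, 0, 1)
)
moody_sim$GRADE <- cut(moody_sim$SCORE + 10 * moody_sim$PARTICIPATION,
                       breaks = c(-Inf, 40, 60, 75, 90, Inf),
                       labels = c("F", "D", "C", "B", "A"))

tree_mb100 <- rpart(GRADE ~ SCORE + DOZES_OFF + TEXTING_IN_CLASS + PARTICIPATION,
                    data = moody_sim, method = "class",
                    control = rpart.control(minbucket = 100))

# Hypothetical helper: number of observations in each leaf node
leaf_n <- function(tree) tree$frame$n[tree$frame$var == "<leaf>"]

# Every leaf holds at least minbucket observations
leaf_n(tree_mb100)
```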

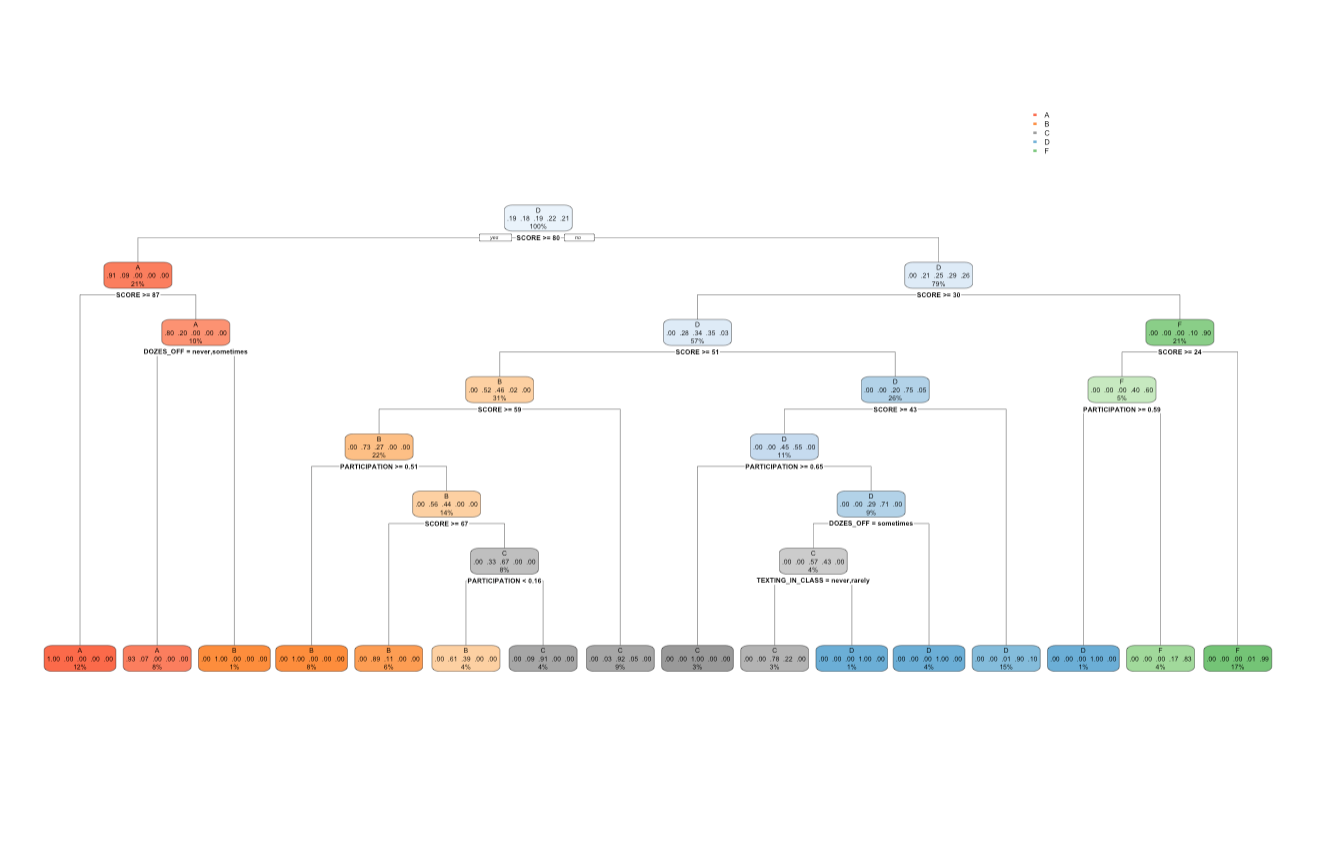

16.3.5 Snippet 7: cp = 0.05

Output tree plot after setting cp=0.05 in the rpart.control() function

16.3.6 Snippet 8: cp = 0.005

We can see from the output and the tree plot that the tree size has increased, with more splits and more leaf nodes. We can also see that the minimum CP value in the output is 0.005.
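To see how cp changes the tree size, the two settings can be fitted side by side. The sketch below runs on a simulated stand-in for the Moody data (`moody_sim` is an assumption, not the real dataset), so the exact node counts will not match the figures; the point is only that a smaller cp allows at least as many splits.

```r
library(rpart)

# Synthetic stand-in for the Moody data (simulated, NOT the real dataset)
set.seed(42)
n <- 1000
habits <- c("never", "sometimes", "always")
moody_sim <- data.frame(
  SCORE            = runif(n, 0, 100),
  DOZES_OFF        = factor(sample(habits, n, replace = TRUE)),
  TEXTING_IN_CLASS = factor(sample(habits, n, replace = TRUE)),
  PARTICIPATION    = runif(n, 0, 1)
)
moody_sim$GRADE <- cut(moody_sim$SCORE + 10 * moody_sim$PARTICIPATION,
                       breaks = c(-Inf, 40, 60, 75, 90, Inf),
                       labels = c("F", "D", "C", "B", "A"))

grade_formula <- GRADE ~ SCORE + DOZES_OFF + TEXTING_IN_CLASS + PARTICIPATION

tree_cp05  <- rpart(grade_formula, data = moody_sim, method = "class",
                    control = rpart.control(cp = 0.05))
tree_cp005 <- rpart(grade_formula, data = moody_sim, method = "class",
                    control = rpart.control(cp = 0.005))

# Total number of nodes in each tree
c(cp_0.05 = nrow(tree_cp05$frame), cp_0.005 = nrow(tree_cp005$frame))

# Complexity table: CP value, number of splits, and error at each stage
tree_cp005$cptable
```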

16.4 Cross Validation

Overfitting takes place when you have high accuracy on the training dataset but low accuracy on the test dataset. But how do you know whether you are overfitting, especially since you cannot determine accuracy on the test dataset? That is where cross-validation comes into play.

Because we cannot determine accuracy on the test dataset, we partition our training dataset into train and validation (testing) partitions. We train our model (rpart or lm) on the train partition and test it on the validation partition. The partition is defined by the split ratio. If the split ratio is 0.7, 70% of the training dataset is used for the actual training of your model (rpart or lm), and 30% is used for validation (or testing). The accuracy on this validation partition is called the cross-validation accuracy.

To know if you are overfitting or not, compare the training accuracy with the cross-validation accuracy. If your training accuracy is high, and cross-validation accuracy is low, that means you are overfitting.
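The comparison can be sketched by hand with a single 70/30 split. This is an illustration on a simulated stand-in for the Moody data (`moody_sim` is an assumption, not the real dataset), and the helper `accuracy()` is hypothetical; it is not the cross_validate() function described in this section.

```r
library(rpart)

# Synthetic stand-in for the Moody data (simulated, NOT the real dataset)
set.seed(42)
n <- 1000
habits <- c("never", "sometimes", "always")
moody_sim <- data.frame(
  SCORE            = runif(n, 0, 100),
  DOZES_OFF        = factor(sample(habits, n, replace = TRUE)),
  TEXTING_IN_CLASS = factor(sample(habits, n, replace = TRUE)),
  PARTICIPATION    = runif(n, 0, 1)
)
moody_sim$GRADE <- cut(moody_sim$SCORE + 10 * moody_sim$PARTICIPATION,
                       breaks = c(-Inf, 40, 60, 75, 90, Inf),
                       labels = c("F", "D", "C", "B", "A"))

# Split ratio 0.7: 70% of the rows for training, 30% for validation
train_idx <- sample(nrow(moody_sim), size = round(0.7 * nrow(moody_sim)))
train <- moody_sim[train_idx, ]
valid <- moody_sim[-train_idx, ]

tree <- rpart(GRADE ~ SCORE + DOZES_OFF + TEXTING_IN_CLASS + PARTICIPATION,
              data = train, method = "class")

# Hypothetical helper: share of rows whose predicted grade is correct
accuracy <- function(tree, data) {
  mean(predict(tree, newdata = data, type = "class") == data$GRADE)
}

train_acc <- accuracy(tree, train)
valid_acc <- accuracy(tree, valid)

# A training accuracy far above the validation accuracy suggests overfitting
c(training = train_acc, validation = valid_acc)
```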

cross_validate(*data*, *tree*, *n_iter*, *split_ratio*, *method*)

- data: The dataset on which cross validation is to be performed.

- tree: The decision tree generated using rpart.

- n_iter: Number of iterations.

- split_ratio: The splitting ratio of the data into train data and validation data.

- method: Method of the prediction. “class” for classification.

The way the function works is as follows:

- It randomly partitions your data into training and validation.

- It then constructs the following two decision trees on training partition:

- The tree that you pass to the function.

- The tree constructed on all attributes as predictors and with no control parameters.

- It then determines the accuracy of the two trees on the validation partition and returns the accuracy values for both trees.

The values in the first column (accuracy_subset) returned by the cross_validate() function matter most when it comes to detecting overfitting. If these values are much lower than your training accuracy, you are overfitting.

We also want the values in accuracy_subset to be close to each other (in other words, to have low variance). If the values differ widely, your model (or tree) has high variance, which is not desirable.

The second column (accuracy_all) tells you what happens if you construct a tree based on all attributes. If these values are larger than those in accuracy_subset, you are probably leaving relevant attributes out of your tree.

Each iteration of cross-validation creates a different random partition of train and validation, and so you have possibly different accuracy values for every iteration.
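The procedure described above can be approximated with a short loop. The function `holdout_compare()` below is a hypothetical sketch, not the implementation in the CrossValidation package: as a simplification it takes a formula instead of a fitted tree, ignores control parameters, and runs on a simulated stand-in for the Moody data (`moody_sim`, not the real dataset). The column names mirror the ones discussed in the text.

```r
library(rpart)

# Synthetic stand-in for the Moody data (simulated, NOT the real dataset)
set.seed(42)
n <- 1000
habits <- c("never", "sometimes", "always")
moody_sim <- data.frame(
  SCORE            = runif(n, 0, 100),
  DOZES_OFF        = factor(sample(habits, n, replace = TRUE)),
  TEXTING_IN_CLASS = factor(sample(habits, n, replace = TRUE)),
  PARTICIPATION    = runif(n, 0, 1)
)
moody_sim$GRADE <- cut(moody_sim$SCORE + 10 * moody_sim$PARTICIPATION,
                       breaks = c(-Inf, 40, 60, 75, 90, Inf),
                       labels = c("F", "D", "C", "B", "A"))

# Hypothetical sketch of repeated random train/validation splits
holdout_compare <- function(data, subset_formula, n_iter = 5, split_ratio = 0.7) {
  results <- data.frame(accuracy_subset = numeric(n_iter),
                        accuracy_all    = numeric(n_iter))
  for (i in seq_len(n_iter)) {
    # New random partition in every iteration
    idx   <- sample(nrow(data), size = round(split_ratio * nrow(data)))
    train <- data[idx, ]
    valid <- data[-idx, ]

    # Tree on the chosen predictors, and tree on all attributes
    tree_subset <- rpart(subset_formula, data = train, method = "class")
    tree_all    <- rpart(GRADE ~ ., data = train, method = "class")

    # Accuracy of both trees on the validation partition
    results$accuracy_subset[i] <-
      mean(predict(tree_subset, newdata = valid, type = "class") == valid$GRADE)
    results$accuracy_all[i] <-
      mean(predict(tree_all, newdata = valid, type = "class") == valid$GRADE)
  }
  results
}

cv_results <- holdout_compare(moody_sim, GRADE ~ SCORE + PARTICIPATION,
                              n_iter = 5, split_ratio = 0.7)
cv_results
```

Because each iteration draws a new random partition, the rows of `cv_results` generally differ from one another; their spread gives a rough sense of the variance discussed above.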

Let’s look at the cross_validate() function in action in the example below.

We will pass a tree built with the formula GRADE ~ SCORE+DOZES_OFF+TEXTING_IN_CLASS+PARTICIPATION and the control parameter minsplit=100.

For the cross_validate() function, we will use n_iter=5 and split_ratio=0.7.

| NOTE: The CrossValidation repository is already preloaded for the following interactive code block, so you can use the cross_validate() function there directly. If you wish to use the cross_validate() function locally, please use the following commands: |

install.packages("devtools")

devtools::install_github("devanshagr/CrossValidation")

CrossValidation::cross_validate()

16.4.1 Snippet 9

You can see that the cross-validation accuracies for the tree that was passed (accuracy_subset) are fairly high and close to our training accuracy of 84%. This means we are not overfitting. Also observe that accuracy_subset and accuracy_all have the same values, which means that the only relevant attributes are score and participation, and adding more attributes makes no difference to the tree. Finally, the values in accuracy_subset are reasonably close to each other, which means low variance.

16.5 Prediction using rpart

Now that we have seen how to create a decision tree and plot it, we would like to use the output tree to predict the required attribute.

In the Moody example, we are trying to predict the grades of students. Let's look at the predict() function, which produces these predictions.

predict(*object*, *newdata*, *type*, ...)

- object: the tree generated by the rpart() function.

- newdata: the data on which the prediction is to be performed.

- type: the type of prediction required. One of “vector”, “prob”, “class” or “matrix”.

Now let's use the predict() function to predict the grades of students using the tree generated on the Moody dataset.
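A minimal sketch of this step follows, again on a simulated stand-in for the Moody data (`moody_sim` and the 70/30 train/test split are assumptions for illustration, not the real dataset or the course's actual test set). It shows the "class" and "prob" prediction types.

```r
library(rpart)

# Synthetic stand-in for the Moody data (simulated, NOT the real dataset)
set.seed(42)
n <- 1000
habits <- c("never", "sometimes", "always")
moody_sim <- data.frame(
  SCORE            = runif(n, 0, 100),
  DOZES_OFF        = factor(sample(habits, n, replace = TRUE)),
  TEXTING_IN_CLASS = factor(sample(habits, n, replace = TRUE)),
  PARTICIPATION    = runif(n, 0, 1)
)
moody_sim$GRADE <- cut(moody_sim$SCORE + 10 * moody_sim$PARTICIPATION,
                       breaks = c(-Inf, 40, 60, 75, 90, Inf),
                       labels = c("F", "D", "C", "B", "A"))

# Assumed split: fit on 70% of the rows, predict on the remaining 30%
train_idx <- sample(nrow(moody_sim), size = round(0.7 * nrow(moody_sim)))
train <- moody_sim[train_idx, ]
test  <- moody_sim[-train_idx, ]

tree <- rpart(GRADE ~ SCORE + DOZES_OFF + TEXTING_IN_CLASS + PARTICIPATION,
              data = train, method = "class")

# type = "class": one predicted grade per row
pred_class <- predict(tree, newdata = test, type = "class")
head(pred_class)

# type = "prob": one probability per grade category for each row
pred_prob <- predict(tree, newdata = test, type = "prob")
head(pred_prob)

# Confusion table of predicted versus actual grades
table(predicted = pred_class, actual = test$GRADE)
```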